Gemini 3 vs. Claude 4.5: The No Hype, Real World Showdown

If your Twitter feed is anything like ours, it has been an absolute warzone lately. Every 48 hours, a new benchmark drops claiming "AGI is basically here," or "Model X just destroyed Model Y."

It is exhausting, isn't it?

As founders and developers building CodeDesign.ai, we honestly do not care about the synthetic benchmarks. We do not care about how well an AI can pass the Bar Exam (unless our lawyers are reading this—hi guys). We care about shipping.

We care about that specific panic at 2:00 AM when the API is throwing a 500 error, the coffee pot is empty, and you just need an answer that works. We care about refactoring that terrifying legacy function without taking down the entire production environment.

So, when Gemini 3 (Google) and Claude 4.5 (Anthropic) dropped their latest previews, we did not just read the spec sheets. We moved in with them. We adopted them into our daily workflow for a solid week. We threw our messiest Python scripts, our most chaotic marketing PDFs, and our vaguest architectural questions at them.

We treated them like new hires. And just like new hires, they turned out to be completely different people.

One is the caffeine driven sprinter who clears the backlog before you have even finished your morning standup. The other is the thoughtful Senior Engineer who stops you from pushing broken code because they "have a bad feeling about it."

Here is the unfiltered, deep dive truth about which one belongs in your IDE in 2025.

The Vibe Check: It Is All About Personality

Before we throw numbers at you, we need to talk about how these tools feel. It sounds unscientific, but when you are pair programming with an AI for eight hours a day, its personality dictates your entire flow state.

Gemini 3: The Silicon Valley Sprinter 🏃‍♂️

Gemini 3 feels like it is vibrating with energy. It is fast. Not just "token generation" fast, but "I already finished your sentence" fast. It feels incredibly eager to please.

If you ask Gemini to do something, it jumps straight into execution mode. It does not ask many questions; it just tries to solve the problem immediately.

- The Vibe: It is like that brilliant Junior Engineer who drinks four espressos, wears a hoodie, and hacks together a working prototype in record time. It might break next week, but it works right now, and sometimes that is all that matters.

Claude 4.5: The Senior Architect 🧠

Claude 4.5 has a completely different presence. It feels deliberate. When you send a prompt, there is a palpable (though digital) pause where it seems to be actually thinking. It often prefaces answers with context or warnings. It cares deeply about why you are doing something, not just how.

- The Vibe: It is the Staff Engineer who sits back in their chair, listens to your frantic explanation, and then calmly points out the one edge case that would have crashed the entire system if you had not caught it.

Round 1: The Coding Gauntlet 💻

For our team, this is the only metric that truly counts. Can it code? And more importantly, can it code safely?

We set up a clean VS Code environment running Python 3.11 and threw three distinct challenges at both models. These were not LeetCode puzzles; they were real world scenarios we face in production.

Challenge A: The "Everything is Broken" Bug Fix

The Scenario: We presented a Flask endpoint that was returning a generic 500 Internal Server Error whenever a specific header was missing. This is a classic "silent failure" that drives developers crazy. We asked the models to fix it to return a 400 Bad Request with a JSON error and keep the logging intact.

Gemini 3’s Move:

Gemini did not hesitate. It analyzed the traceback and provided a compact patch in 48 seconds. It did not just fix the specific line; it added a neat little helper function to validate headers in the future and suggested a slightly better log format.

- The Result: It worked on the first try. It was efficient, clean, and Pythonic.

Claude 4.5’s Move:

Claude took longer—63 seconds. But when we looked at the code, we saw why. Claude did not just check if the header was missing; it checked if the header was empty or contained invalid characters. It wrote "defensive code."

- The Result: It also worked on the first try, but it was robust against future errors we had not even described in the prompt.

The Takeaway: Gemini solves the bug you have. Claude solves the bug you have, plus the three bugs you were about to have next week.
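To make "defensive" concrete, here is a minimal sketch of that kind of check. It is not the code either model produced. The header name `X-Request-Id`, the helper `check_required_header`, and the rule for what counts as a valid value are all our own assumptions for illustration. We kept it framework-free so it runs anywhere.

```python
import logging
import re

logger = logging.getLogger("api")

# Hypothetical header name; the scenario above does not say which header it was.
REQUIRED_HEADER = "X-Request-Id"
# Assumed validity rule: 1 to 128 printable ASCII characters, no spaces.
VALID_VALUE = re.compile(r"[\x21-\x7e]{1,128}")


def check_required_header(headers):
    """Return (json_body, 400) for a bad header, or None when it is fine."""
    value = headers.get(REQUIRED_HEADER)
    if value is None:
        reason = "missing"   # the bug we actually described
    elif not value.strip():
        reason = "empty"     # present, but blank
    elif not VALID_VALUE.fullmatch(value.strip()):
        reason = "invalid"   # control characters, non-ASCII, too long
    else:
        return None
    # Keep logging intact: record why the request was rejected.
    logger.warning("Rejected request: %s header is %s", REQUIRED_HEADER, reason)
    return {"error": f"{REQUIRED_HEADER} header is {reason}"}, 400


print(check_required_header({}))
print(check_required_header({"X-Request-Id": "abc-123"}))
```

In a Flask view you would call this with `request.headers` at the top of the handler and return the tuple as is when it is not `None`; recent Flask versions serialize a dict body to JSON. The first branch alone is the "bug you have" fix. The other two are the defensive extras.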

Challenge B: The Refactor from Hell

The Scenario: We fed the models a messy, 8 step Pandas data pipeline. It was functional but ugly—chained methods, no comments, and logic that was impossible to read. We asked them to refactor it into 4 clear steps.

Gemini 3:

It did a decent job collapsing the steps. The code was shorter and cleaner. However, during our unit tests, the Gemini version failed on one specific edge case: NaN (Not a Number) values in the dataset. It assumed the data was clean. We had to prompt it again: "Hey, what about null values?" before it fixed it.

Claude 4.5:

This was Claude's "mic drop" moment. On the very first run, Claude produced a beautiful pipeline. It added comments explaining why it grouped certain operations. Crucially, it automatically added a .fillna() step, anticipating that real world data is never clean. It even suggested a small performance optimization at the end.

The Takeaway: In complex logic, Claude’s "world model" seems to include a better understanding of reality (i.e., data is always messy).
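For a feel of what a four-step pipeline with explicit null handling can look like, here is a small sketch using pandas. It is not our production pipeline and not either model's output. The column names (`region`, `amount`, `units`) and the fill values are assumptions we picked for the example.

```python
import pandas as pd


def clean(df):
    # Step 1: make missing values explicit instead of assuming clean data.
    return df.assign(
        region=df["region"].fillna("unknown"),
        amount=df["amount"].fillna(0.0),
    )


def add_revenue(df):
    # Step 2: derive the metric we care about.
    return df.assign(revenue=df["amount"] * df["units"])


def total_by_region(df):
    # Step 3: aggregate to one row per region.
    return df.groupby("region", as_index=False)["revenue"].sum()


def rank(df):
    # Step 4: order the result for reporting.
    return df.sort_values("revenue", ascending=False).reset_index(drop=True)


def run_pipeline(df):
    return df.pipe(clean).pipe(add_revenue).pipe(total_by_region).pipe(rank)


raw = pd.DataFrame(
    {
        "region": ["north", None, "north", "south"],
        "amount": [10.0, None, 5.0, 2.0],
        "units": [2, 3, 1, 4],
    }
)
result = run_pipeline(raw)
print(result)
```

Without the first step, the row with a missing region would quietly drop out of the `groupby`, because pandas excludes null group keys by default. That is the kind of silent data loss a test on dirty input catches.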

Challenge C: The "Find My Blind Spots" Test

The Scenario: We asked the models to write property-based tests (using the Hypothesis library) for a custom date parser we wrote.

Gemini 3:

It quickly generated the standard suite of tests: valid dates, invalid months, leap years. It was "good enough" for a PR review.

Claude 4.5:

Claude went into detective mode. It generated tests for leap seconds. It generated tests for negative timezone offsets. It generated tests for Unicode characters acting as date separators. It found a lurking bug in our parser that we did not even know existed.
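If you have not used Hypothesis before, here is a minimal sketch of what property-based tests for a date parser can look like. The `parse_date` function below is a hypothetical stand-in that only accepts YYYY-MM-DD; it is not our real parser, and these are not the tests either model wrote. They cover the "standard suite" end of the spectrum: round trips, invalid months, and leap years.

```python
import calendar
import datetime as dt

from hypothesis import given, strategies as st


def parse_date(text: str) -> dt.date:
    """Hypothetical stand-in parser: accepts YYYY-MM-DD only."""
    year, month, day = text.split("-")
    return dt.date(int(year), int(month), int(day))


def assert_rejected(text: str) -> None:
    """Fail unless the parser raises ValueError for this input."""
    try:
        parse_date(text)
    except ValueError:
        return
    raise AssertionError(f"parser accepted invalid input {text!r}")


@given(st.dates())
def test_round_trip(d):
    # Property: formatting any valid date and parsing it back is lossless.
    assert parse_date(d.isoformat()) == d


@given(st.integers(1, 9999), st.integers(13, 99), st.integers(1, 28))
def test_invalid_month_rejected(year, month, day):
    # Property: months outside 1..12 are never accepted.
    assert_rejected(f"{year:04d}-{month:02d}-{day:02d}")


@given(st.integers(1, 9999).filter(lambda y: not calendar.isleap(y)))
def test_feb_29_rejected_in_non_leap_years(year):
    # Property: February 29 only exists in leap years.
    assert_rejected(f"{year:04d}-02-29")


if __name__ == "__main__":
    test_round_trip()
    test_invalid_month_rejected()
    test_feb_29_rejected_in_non_leap_years()
    print("all properties held")
```

Each decorated function can be run by pytest or called directly, as shown at the bottom. The detective-mode version would add strategies for timezone offsets and unusual separator characters on top of this.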

Round 2: The Brains (Reasoning & Writing) 🧠

Code is just text. But modern development involves reading specs, analyzing screenshots, and parsing massive logs.

The "Native" Feel of Multimodal

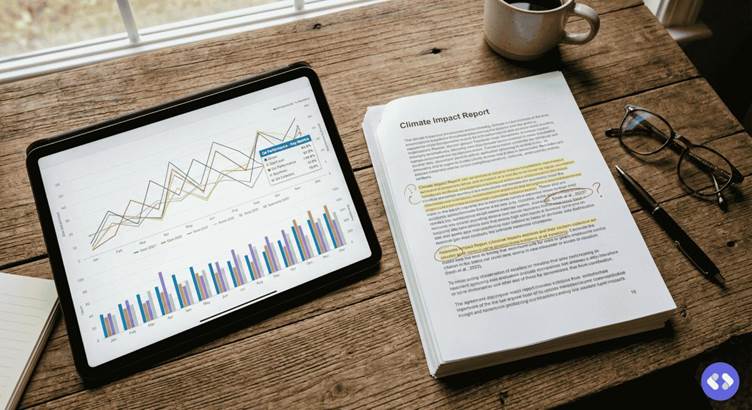

This is where Gemini 3 flexes its Google muscles. We dropped a 72-page marketing analytics PDF (10 MB) into the chat.

- Gemini: Instantly accepted it. We asked for a table of KPIs with page citations. Gemini spit out a perfect markdown table, citing "Page 12" and "Page 45" accurately. It felt native, like the model was reading the file directly from the hard drive.

- Claude: It handled the PDF well, but it felt like a heavier lift. However, where Claude shined was the analysis. When we asked, "Why did the conversion rate drop in Q3?" based on the PDF data, Claude gave a nuanced answer connecting three different charts. Gemini gave a summary of the charts but missed the causal link.

The Hallucination Problem

We asked both models to draft a 1,000 word research brief on "The Future of Serverless Architecture," complete with citations.

- Claude 4.5: This is the writer's choice. The essay flowed. It had a thesis statement, body paragraphs that transitioned logically, and a strong conclusion. Most importantly, when we asked for sources, Claude linked to real papers. When it could not find a source for a specific claim, it said, "I cannot verify this specific statistic, so I have omitted it."

- Gemini 3: It wrote a very structured, bullet point heavy brief. It was easy to scan. However, we caught it hallucinating a citation once. It referenced a "2024 AWS Whitepaper" that did not exist. It was a convincing lie, but a lie nonetheless.

The Takeaway: If you are using AI to write content for your startup (like we do at CodeDesign.ai), Claude is the safer bet for accuracy. Gemini is great for outlines and rough drafts, but you need to act as the Fact Checking Department.

Round 3: The Ecosystem & Workflow 🛠️

You do not use a model in a vacuum; you use it to get work done.

The Google Integration (Gemini's Superpower)

If your startup runs on Google Workspace (Docs, Sheets, Drive), Gemini 3 is basically a cheat code. The inline grounding allows you to say, "Draft a project timeline based on that meeting transcript in my Drive from last Tuesday."

It pulls the context effortlessly. For operational tasks—cleaning up a CRM spreadsheet, summarizing meeting notes, drafting emails—Gemini is unbeatable because it lives where your data lives.

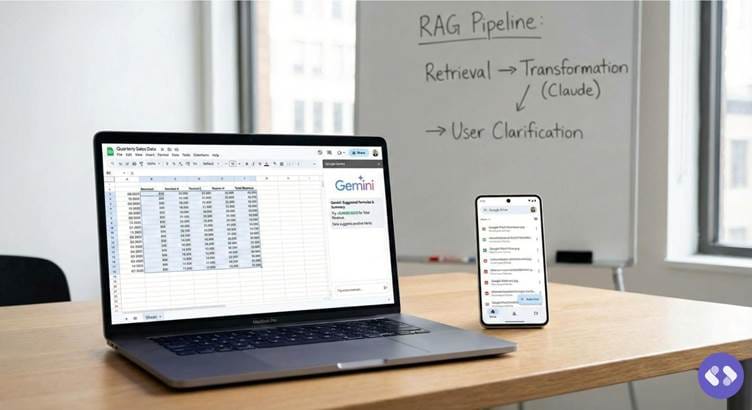

The Agentic Workflow (Claude's Superpower)

Claude 4.5 excels when you treat it as an "Agent." In our tests using RAG (Retrieval-Augmented Generation) pipelines, Claude was much better at maintaining "state."

If you give it a complex instruction like: "Search for X, then transform the data using format Y, and if Z is missing, ask the user for clarification," Claude follows that flowchart religiously. Gemini sometimes tries to skip steps to get to the answer faster.
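Written out as plain control flow, that instruction is a three-step flowchart. The sketch below is only an illustration of the steps a model is expected to follow in order. The function `run_workflow`, its callables, and the field name `z` are hypothetical; no model API is involved.

```python
from typing import Callable


def run_workflow(
    query: str,
    search: Callable[[str], dict],
    transform: Callable[[dict], dict],
    ask_user: Callable[[str], str],
    required_field: str = "z",
) -> dict:
    # Step 1: search for X.
    record = search(query)
    # Step 2: transform the data into format Y.
    shaped = transform(record)
    # Step 3: if Z is missing, ask for clarification instead of guessing.
    if shaped.get(required_field) in (None, ""):
        answer = ask_user(f"'{required_field}' is missing for {query!r}. What should it be?")
        shaped = {**shaped, required_field: answer}
    return shaped


# Stub callables so the sketch runs without any external service.
questions = []


def fake_ask(prompt: str) -> str:
    questions.append(prompt)
    return "from-user"


incomplete = run_workflow("x", lambda q: {"name": q}, dict, fake_ask)
complete = run_workflow("x", lambda q: {"name": q, "z": 1}, dict, fake_ask)
print(incomplete, complete, len(questions))
```

Skipping steps to "get to the answer faster" corresponds to dropping the third step and returning `shaped` with a guessed or empty `z`.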

⚔️ The Cheat Sheet: For the Skimmers

We know you are busy. Here is the "Too Long; Did Not Read" summary for your team Slack channel.

| Feature | ⚡ Gemini 3 (Google) | 🧠 Claude 4.5 (Anthropic) | The Verdict |
| --- | --- | --- | --- |
| Core Vibe | The Sprinter. High energy, eager, fast. | The Architect. Thoughtful, deliberate, careful. | N/A |
| Coding Style | Functional. Great for quick scripts, one off patches, and standard boilerplate. | Defensive. Writes robust code with error handling, comments, and edge case checks. | Claude for Production; Gemini for Prototyping. |
| Reasoning | Good, but confident. Occasionally makes up sources if pushed too hard. | Excellent. "Doubts itself" appropriately and admits ignorance. | Claude (Significantly). |
| Speed | Blazing. Consistently returns output 15-20% faster on short prompts. | Deliberate. Takes a moment to "think," especially on complex prompts. | Gemini wins the race. |
| Context Window | Massive (1M+ tokens). Handles huge inputs loosely but quickly. | Large (200k+). Handles large inputs with higher recall accuracy. | Claude for precision; Gemini for volume. |
| Ecosystem | Native Google Integration. Docs, Sheets, Drive: it lives where you work. | Standalone Powerhouse. Best used via API or Console. | Gemini (if you use Workspace). |
| Tone | Enthusiastic, sometimes verbose, helpful. | Professional, concise, "Senior Engineer" vibes. | Claude (for professional clarity). |

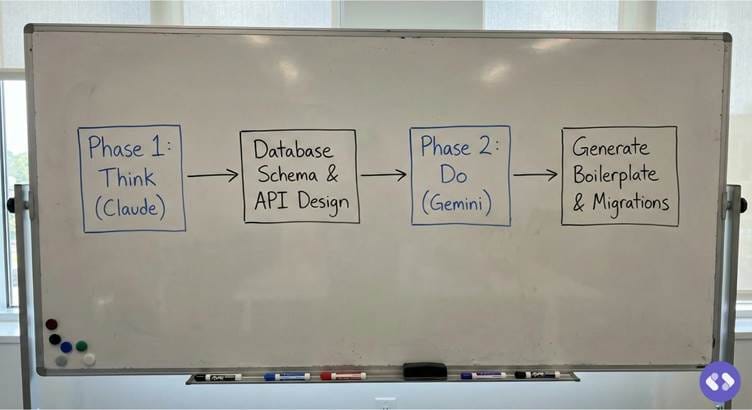

The "CodeDesign Strategy": How We Use Both 🚀

After a week of testing, we realized that picking a "winner" is the wrong mindset. In 2025, the best developers are "Model Agnostic." They know which tool to pull from the belt.

Here is the exact workflow we have adopted at CodeDesign.ai:

Phase 1: The "Think" Phase (Claude 4.5)

We start every major feature with Claude.

- "Here are the requirements. Act as a System Architect. Design the database schema, outline the API endpoints, and list the potential security risks."

- "Review this legacy function. Explain what it does and refactor it for readability."

Claude is our safety net. We trust its reasoning capabilities to set the foundation.

Phase 2: The "Do" Phase (Gemini 3)

Once the plan is set, we switch to Gemini to get it done fast.

- "Generate the SQL migration file for this schema."

- "Write the 50 lines of boilerplate code for these three endpoints."

- "Take this JSON error log and tell me which line is broken."

Gemini is our engine. It saves us hours of typing and looking up syntax.
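If you want to encode this split in tooling, a tiny router is enough. The sketch below is hypothetical: the task labels and the `pick_model` helper are our own invention, and the strings it returns are just the model names used in this article, not API identifiers.

```python
# Task labels are hypothetical; adjust them to your own backlog categories.
THINK_TASKS = {"architecture", "security_review", "legacy_refactor"}
DO_TASKS = {"sql_migration", "boilerplate", "log_triage"}


def pick_model(task_type: str) -> str:
    """Map a task label to the model we would reach for first."""
    if task_type in THINK_TASKS:
        return "Claude 4.5"
    if task_type in DO_TASKS:
        return "Gemini 3"
    # Assumption: unknown work goes to the more cautious model by default.
    return "Claude 4.5"


for task in ("architecture", "sql_migration", "something_new"):
    print(task, "->", pick_model(task))
```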

Final Verdict: The "Daily Driver" 🏁

If you forced us to pick only one subscription for a desert island coding bootcamp?

We would pick Claude 4.5.

Why? Because in software development, the cost of a bug is always higher than the cost of waiting an extra 15 seconds for an answer. Claude's defensive coding style and ability to catch logic errors save us from "cleanup duty" later on. It feels like a force multiplier for a senior developer's brain.

But—and this is a big but—if your day involves 50% coding and 50% emails, documents, and spreadsheets, Gemini 3 is the productivity unlock you have been waiting for. The Google integration is simply too good to ignore for general business tasks.

The smart move? Use the free tiers or previews of both until you feel the difference yourself. Your brain works differently than ours. Try them on your real tasks for 48 hours. You will feel which one argues less with your workflow. That is your winner.

Building something cool should not be a headache. Whether you are a Claude fan or a Gemini loyalist, CodeDesign.ai helps you turn those AI generated ideas into stunning, functional websites in minutes. Stop wrestling with div tags and start shipping.