The Ultimate Guide to the Top Large Language Models in 2025

Introduction

In Artificial Intelligence, 2025 has been a year of rapid acceleration. Large Language Models (LLMs) are evolving quickly, and models that were considered state-of-the-art just last year have already been replaced by more powerful and versatile successors. If you want to pick the best AI for your project in late 2025, you need a guide to the current frontier.

This guide cuts through the noise to focus on the top-tier, trending LLMs that are defining the next era of AI. We'll compare them on what matters now: agentic reasoning, multimodal fluency, massive context handling, and real-world performance.

What Are LLMs and Why Are They So Important?

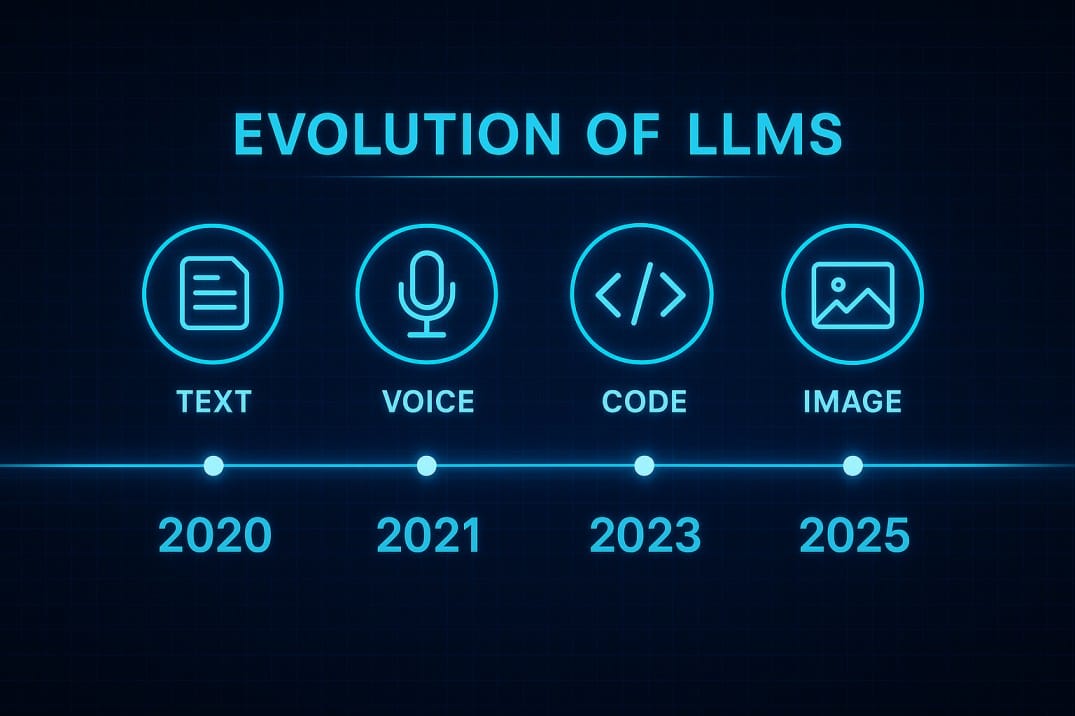

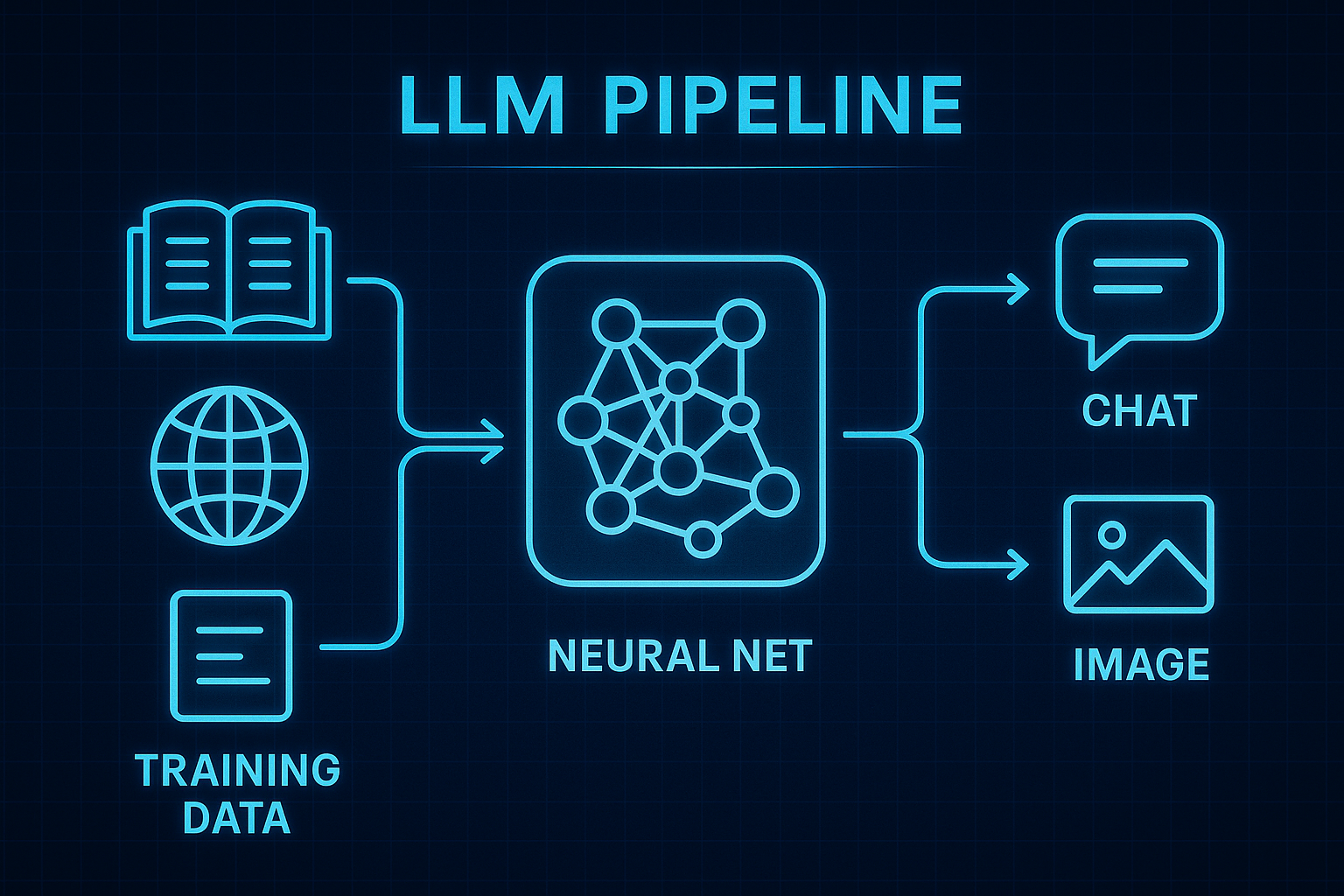

LLMs are a type of artificial intelligence trained to understand and generate human-like text by learning from vast datasets. They are the engines behind the most advanced AI applications today.

As of late 2025, the key trends have matured into industry standards:

- Agentic AI & Tool Orchestration: The best models don't just answer questions; they act. They can use tools, run code, and orchestrate complex, multi-step workflows to accomplish goals autonomously.

- Million-Token Context Windows: Context windows have grown from tens of thousands of tokens to hundreds of thousands and, for some models, a million or more, allowing them to process and recall information from entire books, codebases, or hours of video in a single request.

- Deep Multimodality: True multimodal understanding is here. The leading models can natively process and reason across text, images, audio, and video streams simultaneously.

- The Open-Source Power Play: Open-weight models are no longer just "alternatives." The latest releases from the open-source community compete directly with the best proprietary systems and, in some cases, outperform them.
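To make the "agentic" trend above concrete, here is a minimal sketch of a tool-orchestration loop. It is not any vendor's API: `scripted_model` is a hypothetical stand-in for a real LLM call, and the two tools are toy functions chosen only so the example runs on its own.

```python
from typing import Callable

# Hypothetical tools for illustration; a real agent would wrap APIs, shells, or databases.
def add(a: float, b: float) -> float:
    return a + b

def word_count(text: str) -> int:
    return len(text.split())

TOOLS: dict[str, Callable] = {"add": add, "word_count": word_count}

def scripted_model(history: list[dict]) -> dict:
    """Stand-in for an LLM: picks the next action from how many tool results exist so far."""
    results = [m for m in history if m["role"] == "tool"]
    if len(results) == 0:
        return {"type": "tool_call", "name": "word_count",
                "args": {"text": "agents call tools in a loop"}}
    if len(results) == 1:
        return {"type": "tool_call", "name": "add",
                "args": {"a": results[0]["content"], "b": 10}}
    return {"type": "final", "content": f"Result: {results[-1]['content']}"}

def run_agent(model: Callable[[list[dict]], dict], goal: str, max_steps: int = 5) -> str:
    """Ask the model for an action, run the requested tool, feed the result back, repeat."""
    history = [{"role": "user", "content": goal}]
    for _ in range(max_steps):
        action = model(history)
        if action["type"] == "final":
            return action["content"]
        output = TOOLS[action["name"]](**action["args"])
        history.append({"role": "tool", "name": action["name"], "content": output})
    raise RuntimeError("agent did not finish within max_steps")

print(run_agent(scripted_model, "Count the words, then add 10"))
```

The `max_steps` cap is a design choice worth keeping in real systems: it bounds cost and stops a model that never produces a final answer.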

Let's dive into the models that are making it all happen.

Top Trending LLMs in 2025

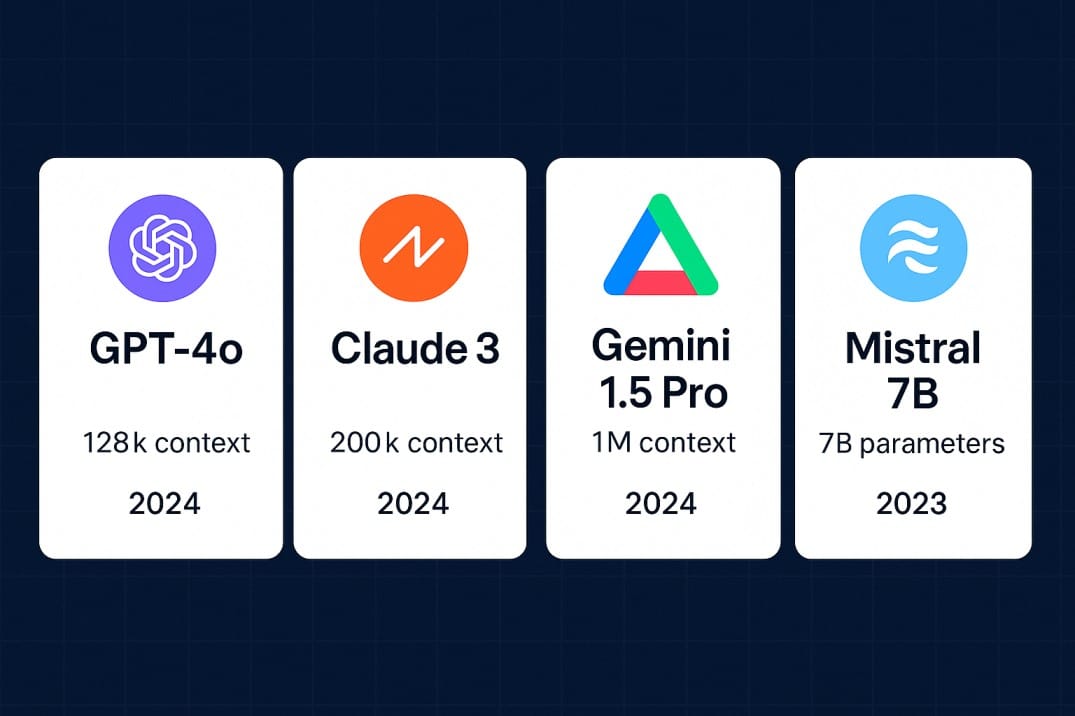

1. GPT-5 (OpenAI)

- Release Year: 2025

- Context Length: 400,000 tokens

- Multimodal: Yes (Text, Image, Audio, Video)

- Why it matters: The new benchmark for complex reasoning and agentic workflows. GPT-5 unifies OpenAI's most advanced capabilities into a single, cohesive model. It excels at multi-step problem-solving, from debugging entire applications to performing layered business analysis, making it the top choice for building sophisticated AI agents.

2. Claude 4 Opus (Anthropic)

- Release Year: 2025

- Context Length: 200,000 tokens

- Multimodal: Yes (Text, Image, Code Execution)

- Why it matters: State-of-the-art intelligence with a focus on enterprise-grade safety and coding. Claude 4 Opus has set a new standard for performance on complex coding benchmarks. Its ability to perform sustained, multi-hour agentic tasks and execute code makes it a powerhouse for professional developers and large-scale enterprise deployments where reliability is paramount.

3. Gemini 2.5 Pro (Google DeepMind)

- Release Year: 2025

- Context Length: 1 million tokens (2 million announced)

- Multimodal: Yes (Text, Image, Audio, Video)

- Why it matters: Very large context capacity and deep multimodal understanding. With a 1 million token context window at launch (Google has said a 2 million token window is planned), Gemini 2.5 Pro can ingest and reason over more data in a single request than the other proprietary models in this list. It is a strong choice for applications that require deep analysis of vast, mixed-media datasets, such as video analysis, full repository code reviews, and large-scale document intelligence.
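Before sending a whole repository or document set to a long-context model, it helps to check whether it plausibly fits. The sketch below is a rough budget check under one stated assumption: about four characters per token, a common rule of thumb for English text. Real tokenizers differ by model and language, so treat the result as an estimate and pass in whatever window size your chosen model documents.

```python
def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Rough token estimate; the 4-characters-per-token ratio is an assumption."""
    return int(len(text) / chars_per_token) + 1

def fits_in_context(documents: list[str], context_tokens: int,
                    reserved_for_output: int = 8_000) -> bool:
    """True if all documents plus an output reserve fit within the context window."""
    needed = sum(estimate_tokens(d) for d in documents)
    return needed + reserved_for_output <= context_tokens

# Example: two files of roughly 300,000 characters each against a 128,000-token window.
files = ["x" * 300_000, "y" * 300_000]
print(fits_in_context(files, context_tokens=128_000))
```

If the check fails, the usual options are a larger-context model, chunking the input, or retrieving only the relevant passages.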

4. Command R+ (Cohere)

- Release Year: 2024

- Context Length: 128,000 tokens

- Multimodal: No

- Why it matters: Still the industry leader for grounded, accurate RAG. While newer models have emerged, Command R+ remains the best-in-class model specifically optimized for Retrieval-Augmented Generation. For enterprise applications built on private knowledge bases (e.g., using software like Bloomfire) that require verifiable, cited answers, it remains the top trending and most reliable choice.
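The Retrieval-Augmented Generation pattern mentioned above can be sketched in a few lines. This is a toy illustration, not Cohere's API: retrieval here is plain word overlap standing in for embedding search, and the document ids and texts are invented for the example. The resulting prompt would then be sent to whichever model you use.

```python
import re

def tokenize(text: str) -> set[str]:
    """Lowercase word set; a crude stand-in for an embedding."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query: str, docs: dict[str, str], k: int = 2) -> list[str]:
    """Return the ids of the k documents sharing the most words with the query."""
    q = tokenize(query)
    ranked = sorted(docs, key=lambda doc_id: len(q & tokenize(docs[doc_id])), reverse=True)
    return ranked[:k]

def build_prompt(query: str, docs: dict[str, str], k: int = 2) -> str:
    """Assemble a grounded prompt that asks the model to cite sources by id."""
    ids = retrieve(query, docs, k)
    sources = "\n".join(f"[{i}] {docs[i]}" for i in ids)
    return (
        "Answer using only the sources below and cite them by id.\n"
        f"{sources}\n"
        f"Question: {query}"
    )

# Hypothetical knowledge base entries.
kb = {
    "hr-1": "Employees accrue 20 vacation days per year.",
    "it-4": "Reset your password from the login page.",
    "hr-2": "Vacation requests need manager approval.",
}
print(build_prompt("How many vacation days do employees get?", kb))
```

Keeping source ids in the prompt is what makes cited, verifiable answers possible: the model can point back to `[hr-1]` and a reader can check it.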

5. Llama 4 (Meta)

- Release Year: 2025

- Context Length: Up to 10 million tokens (Scout model)

- Multimodal: Yes (Text, Image)

- Why it matters: The pinnacle of open-weight AI, now with a Mixture-of-Experts (MoE) architecture. Llama 4 is not just one model but a family of them, offering unparalleled performance and efficiency that seriously challenge proprietary systems. It's the definitive choice for those who need maximum control, customization, and the ability to self-host a truly frontier-level model.
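For readers new to Mixture-of-Experts, the core idea is that a small router picks a few "expert" sub-networks per input, so only a fraction of the model's parameters run for each token. The sketch below shows generic top-k routing with scalar toy experts. It is an illustration of the general technique, not Meta's actual Llama 4 routing, and every name and value in it is hypothetical.

```python
import math
from typing import Callable

def softmax(xs: list[float]) -> list[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(router_logits: list[float], k: int = 2) -> list[tuple[int, float]]:
    """Pick the k highest-scoring experts and renormalize their weights to sum to 1."""
    probs = softmax(router_logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]

def moe_forward(x: float, experts: list[Callable[[float], float]],
                router_logits: list[float], k: int = 2) -> float:
    """Weighted sum of the selected experts' outputs; unselected experts never run."""
    return sum(w * experts[i](x) for i, w in route_top_k(router_logits, k))

# Four toy experts; in a real model each would be a feed-forward network.
experts = [lambda x: x, lambda x: 2 * x, lambda x: 3 * x, lambda x: 4 * x]
print(route_top_k([0.0, 2.0, 1.0, -1.0], k=2))
print(moe_forward(1.0, experts, [0.0, 2.0, 1.0, -1.0], k=2))
```

The efficiency gain comes from the last function: with `k=2` of four experts, half of the expert computation is skipped for this input.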

Feature Comparison: Performance, Speed, and Use Cases

Performance and Speed Overview

| Model | Performance | Primary Strength | Key Feature |
| --- | --- | --- | --- |
| GPT-5 | Frontier | Agentic Reasoning | Unified, multi-step task execution |
| Claude 4 Opus | Frontier | Enterprise & Coding | State-of-the-art coding benchmarks |
| Gemini 2.5 Pro | Frontier | Massive Context | 1 million token window, deep multimodality |
| Llama 4 | High-Frontier | Open-Source Control | Mixture-of-Experts, massive scale |
| Command R+ | High | RAG & Grounding | Highly accurate, cited enterprise answers |

Multimodal Capability Matrix

| Model | Text | Image | Audio | Video |
| --- | --- | --- | --- | --- |
| GPT-5 | Yes | Yes | Yes | Yes |
| Claude 4 Opus | Yes | Yes | No | No |
| Gemini 2.5 Pro | Yes | Yes | Yes | Yes |
| Llama 4 | Yes | Yes | No | No |
| Command R+ | Yes | No | No | No |
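If you want to apply the matrix above programmatically, for example in a model-selection script, it can be encoded as plain data and filtered. The sketch below simply transcribes the table as given in this guide. The function name is hypothetical, and you should re-check modality support against each vendor's current documentation before relying on it.

```python
# Modalities per model, transcribed from the capability matrix in this guide.
MODALITIES: dict[str, set[str]] = {
    "GPT-5": {"text", "image", "audio", "video"},
    "Claude 4 Opus": {"text", "image"},
    "Gemini 2.5 Pro": {"text", "image", "audio", "video"},
    "Llama 4": {"text", "image"},
    "Command R+": {"text"},
}

def models_supporting(required: set[str]) -> list[str]:
    """Return the models whose listed modalities include every required one."""
    return [name for name, caps in MODALITIES.items() if required <= caps]

print(models_supporting({"text", "video"}))
print(models_supporting({"image"}))
```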

Use Case Recommendations

| Scenario | Recommended Models |
| --- | --- |
| Building autonomous AI agents | GPT-5, Claude 4 Opus |
| Analyzing entire code repositories or hours of video | Gemini 2.5 Pro, Llama 4 (Scout) |
| Enterprise-grade, safe applications | Claude 4 Opus, Command R+ |
| Self-hosted, custom fine-tuned solutions | Llama 4 |
| Building Q&A systems on private documents | Command R+, Claude 4 Opus |
| Real-time, complex multimodal analysis | Gemini 2.5 Pro, GPT-5 |

Hosting and Access

| Model | Self-Hosted | Primary Access |
| --- | --- | --- |
| GPT-5 | No | OpenAI API |
| Claude 4 Opus | No | Anthropic API, Cloud Providers |
| Gemini 2.5 Pro | No | Gemini API (Google AI Studio), Vertex AI |
| Llama 4 | Yes | Self-hosted, API Providers |
| Command R+ | No | Cohere API |

Final Thoughts: Which LLM Is Right for You?

There is no longer a single "best" LLM; the right choice depends on your specific needs:

- Go with GPT-5 to build the most capable and intelligent AI agents that can reason and act on complex instructions.

- Choose Claude 4 Opus for best-in-class performance on coding and high-stakes enterprise tasks where safety and reliability are non-negotiable.

- Use Gemini 2.5 Pro when your application's key advantage is processing and understanding an enormous amount of multimodal information.

- Build with Llama 4 if you need the power of a frontier model with the transparency, control, and customizability of open weights.

- Rely on Command R+ for enterprise-grade RAG applications where accuracy and grounding answers in your own data are the top priorities.

Optimizing for the Future

The pace of LLM development shows no signs of slowing. As we look toward 2026, expect more powerful agentic capabilities, deeper integration of different modalities, and a narrowing gap between open and closed-source models. The revolution is well underway, and with the tools available today, there has never been a better time to build.
